Model Predictions with Recurrent Neural Networks (RNN)

Since V5.1, ASCMO-DYNAMIC supports Recurrent Neural Networks (referred to as RNNs in the following) for transient modeling. Models that use the RNN method can be exported to all available export formats.

The underlying basis for this new model type is the open-source machine learning platform TensorFlow.

RNNs differ from traditional feed-forward neural networks, such as the single-hidden-layer network found in one of the ASCMO-MOCA example projects. In contrast to such so-called perceptrons, RNN cells maintain a state in addition to their trainable weights and biases. This state is updated whenever the cell processes an input, and it is made available to the cell again when it processes the next input. The cell can therefore be thought of as having memory: the results of earlier evaluations influence the results of later ones. This makes RNNs very interesting candidates for building models from sequential data.

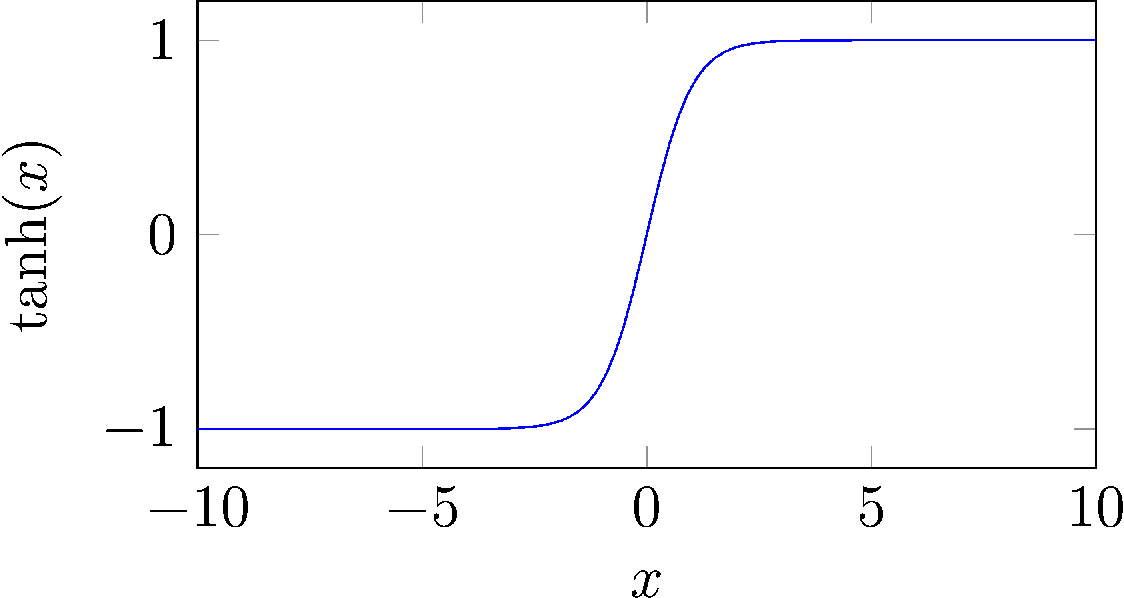

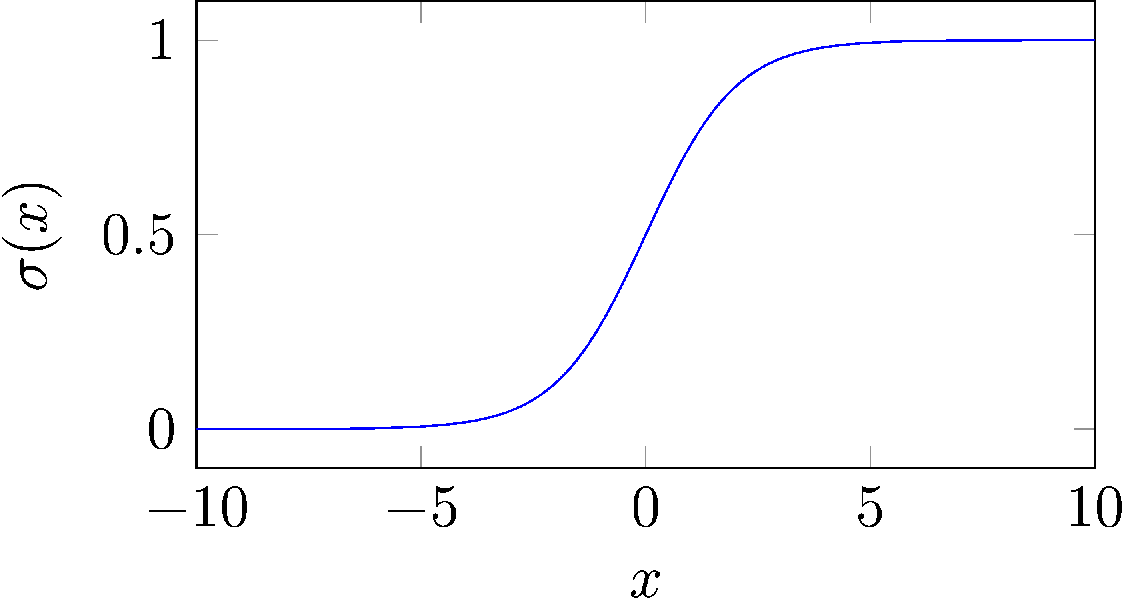
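The idea of a state that is carried from one input to the next can be illustrated with a minimal sketch. It assumes the simplest possible recurrent cell with a scalar state and a tanh activation; the function names `rnn_step` and `run_sequence` and all parameter values are hypothetical and chosen for illustration only. This is not the ASCMO-DYNAMIC or TensorFlow implementation.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a simple recurrent cell with a scalar state.

    The new state depends on the current input x AND on the
    previous state h_prev, which acts as the cell's memory.
    """
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_sequence(xs, w_x, w_h, b, h0=0.0):
    """Feed a sequence through the cell, carrying the state along."""
    h = h0
    states = []
    for x in xs:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# The same input value is presented three times (illustrative values).
xs = [1.0, 1.0, 1.0]

# With a recurrent weight, the output changes from step to step,
# because each step also sees the state left by the previous one.
recurrent = run_sequence(xs, w_x=0.5, w_h=0.8, b=0.0)

# With the recurrent weight set to zero, the cell has no memory and
# behaves like a feed-forward unit: identical inputs, identical outputs.
feed_forward = run_sequence(xs, w_x=0.5, w_h=0.0, b=0.0)

print(recurrent)
print(feed_forward)
```

Setting `w_h` to zero removes the influence of the previous state, which is the distinction between a recurrent cell and a feed-forward unit described above.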

Nevertheless, RNNs share the underlying mechanisms with their non-recurrent relatives. They are organized in layers, where each layer consists of the aforementioned weights and biases, whose output is modified by an activation function. Here we introduce two of these functions, which are visualized in Fig. 15.

[Figure panels: a) hyperbolic tangent, b) sigmoid function]

Fig. 15: Neural network layers commonly consist of a higher-dimensional numerical array and a function that modifies the contents of this array before handing it to the next layer or to the output. These functions are typically called activation functions. Two of these functions are shown in this figure: a) the hyperbolic tangent (tanh), and b) the sigmoid function (σ). The sigmoid function can be expressed with the help of tanh as σ(x) = (1 + tanh(x/2))/2.
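The relation between the two activation functions stated in the caption can be checked numerically. The following is a minimal sketch using only the Python standard library; the helper names `sigmoid` and `sigmoid_via_tanh` and the sample points are assumptions made for illustration.

```python
import math

def sigmoid(x):
    """Sigmoid in its usual form: 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_via_tanh(x):
    """Sigmoid expressed through tanh: (1 + tanh(x/2)) / 2."""
    return (1.0 + math.tanh(x / 2.0)) / 2.0

# Compare both forms at a few illustrative sample points.
xs = [-6.0, -1.0, 0.0, 0.5, 3.0]
max_diff = max(abs(sigmoid(x) - sigmoid_via_tanh(x)) for x in xs)

print(max_diff)  # expected to be at floating-point rounding level
```

The comparison also shows the different output ranges: tanh maps to values between -1 and 1, while the sigmoid maps to values between 0 and 1.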

RNNs come in different forms. A subset of them is now available for the model-building part in ASCMO-DYNAMIC. Specifically, three different classes of RNN cells can be used:

Each description contains a schematic visualization of its corresponding cell. In these diagrams, arrows → describe the flow of data, and nodes symbolize operations on this data. Nodes are distinguished by their shape: rectangular nodes depict inner cell layers, and circular nodes depict point-wise operations on data handed to them. One exception to this rule is the inner state of LSTM cells, which will be addressed explicitly.

See also

Model Configurations: Recurrent Neural Network (RNN)

Model Configurations (ASCMO-DYNAMIC)